How Does AI Generate Images? A Simple Guide

Published on 2025-10-27

So, how does an AI actually create an image from scratch? It all starts with the AI learning from a massive library of existing pictures—we're talking billions of them. It then uses that vast knowledge to create something entirely new based on a text prompt you provide.

Think of it like a digital artist who has spent a lifetime studying every painting, photograph, and sketch ever made. Now, when you ask for something specific, it can draw on all that experience to paint a masterpiece just for you. The two main techniques making this magic happen are diffusion models and Generative Adversarial Networks (GANs).

Your Quick Answer To How AI Generates Images

At its heart, AI image generation is all about recognizing patterns and then creatively putting them back together. An AI doesn't "know" what a cat is in the way we do. Instead, it has analyzed countless photos of cats to learn the statistical patterns of pixels that make up ears, whiskers, and fur.

When you ask for a "futuristic cat in a spacesuit," the AI combines these learned patterns—"cat," "futuristic," and "spacesuit"—to build a brand-new image that matches your description.

This technology has taken off in a huge way. It's estimated that a mind-boggling 34 million AI images are created every single day. Tools like Midjourney and DALL·E have helped generate over 15 billion images since 2022, which gives you a sense of the incredible scale we're talking about.

Two Core Methods of AI Image Generation

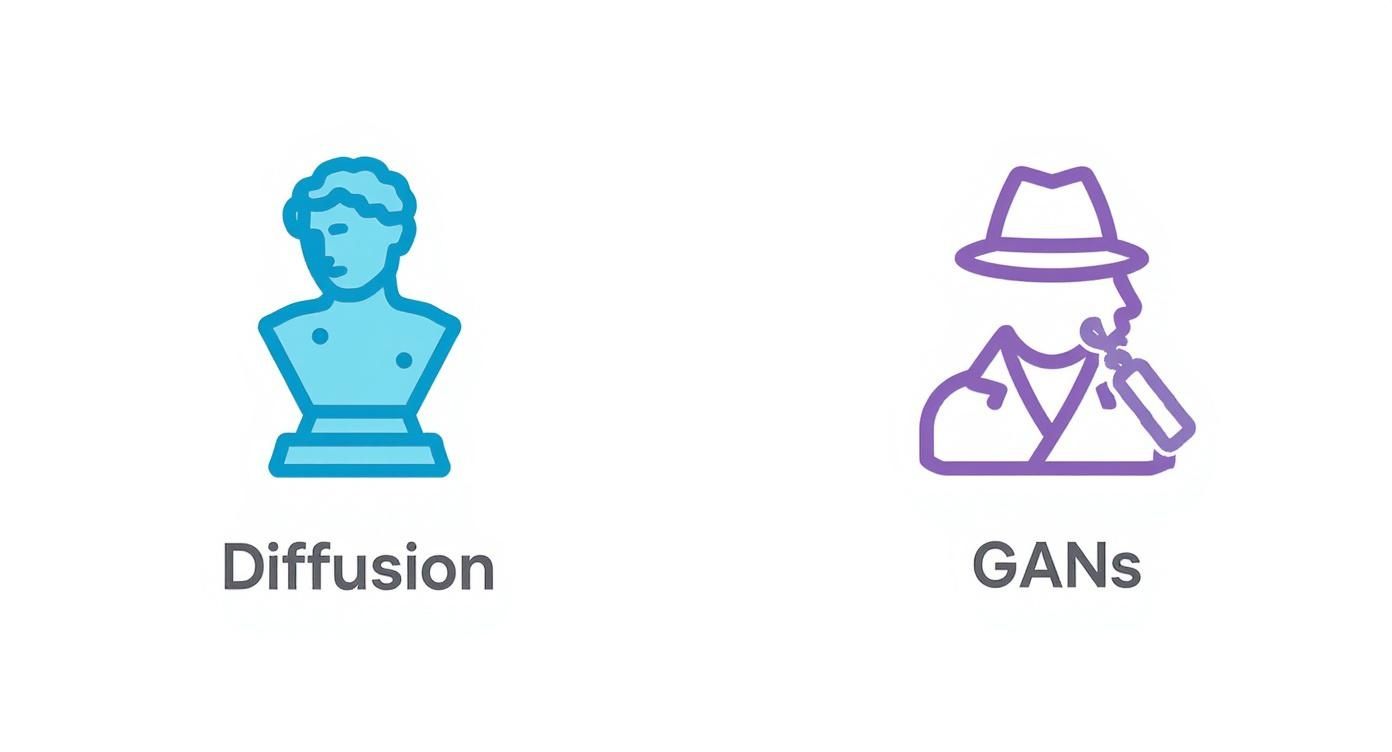

To really get how AI creates images, we need to look at the two main approaches. Each has its own clever way of getting the job done.

Here’s a simple breakdown of how these two powerhouse techniques compare. Think of them as two different artistic philosophies for creating something from nothing.

| Method | Core Idea | Simple Analogy |
|---|---|---|
| Diffusion Models | Starts with digital "noise" (like TV static) and gradually refines it step-by-step into a clear image. | A sculptor starting with a rough block of marble and slowly chipping away to reveal a detailed statue inside. |
| GANs | Two AIs compete: a "Generator" makes images, and a "Discriminator" tries to tell if they're real or fake. | An art forger trying to create a painting so convincing that an expert art detective can't spot the fake. |

As you can see, one method is about careful refinement, while the other is about a high-stakes competition. Both lead to stunningly realistic results.

The infographic above helps visualize the difference: diffusion sculpts an image out of chaos, while GANs use a competitive back-and-forth to get better and better.

Once you have a handle on these concepts, the next logical step is finding the best AI for image creation that fits your specific needs and creative style.

How Diffusion Models Create Art From Chaos

If you've played around with any of the big AI image generators lately, you've seen a diffusion model in action. This is the magic behind many of the most popular tools, and its approach is fascinating because it’s completely backward from how you'd think art is made. It learns to create by first mastering destruction.

Think about it like this: take a perfect, high-resolution photo of a cat. Now, start adding a little bit of digital "fuzz" or static to it, step by step. If you keep doing this, eventually the cat will completely disappear into a mess of random pixels. That’s the "destruction" part.
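To make the "destruction" step concrete, here is a minimal Python sketch. Treat it as an illustration under assumptions, not the exact recipe any particular tool uses: the blend rule (shrink the image slightly, then mix in fresh Gaussian static) is a common formulation for diffusion models, and the 8-pixel `image`, the `beta` value, and the function names are all made up for this example.

```python
import math
import random

def add_noise_step(pixels, beta, rng):
    """One 'fuzz' step: keep most of the image, mix in a little static."""
    keep = math.sqrt(1.0 - beta)   # how much of the current image survives
    mix = math.sqrt(beta)          # how much fresh static is added
    return [keep * p + mix * rng.gauss(0.0, 1.0) for p in pixels]

def signal_remaining(beta, steps):
    """Fraction of the original image still present after `steps` steps."""
    return math.sqrt(1.0 - beta) ** steps

rng = random.Random(0)
# Hypothetical 8-pixel "photo" (assumption: values in the range -1..1).
image = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0]

noisy = image
for _ in range(200):
    noisy = add_noise_step(noisy, beta=0.05, rng=rng)

print(signal_remaining(0.05, 10))    # early on, most of the image is intact
print(signal_remaining(0.05, 200))   # by the end, almost nothing is left
```

Each step only removes a sliver of the picture, which is why the cat fades gradually instead of vanishing at once.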

During training, the AI works through millions of images like this, learning how to reverse that process. It doesn't just wipe the noise away in one go. Instead, it learns how to walk backward, carefully estimating what the image looked like just before that last little bit of static was added.

From Chaos to Cohesion

Okay, so how does learning to remove noise help an AI create a picture of "a robot eating spaghetti" from scratch?

Well, the AI starts with a blank canvas of pure, random noise. It's just digital chaos. But your text prompt acts as a tour guide. It tells the model what to look for as it starts cleaning up that chaos.

With your prompt in mind, the model begins the denoising process. At every single step, it nudges the pixels around, essentially asking itself, "Is this looking a bit more like a robot? Are those shapes starting to look like spaghetti?" It repeats this over and over: typically dozens of times, and in some setups hundreds of times or more.

This gradual refinement is what makes diffusion models so powerful. The AI isn't just pulling a finished image out of a database. It's sculpting—carefully chipping away at the noise to reveal the image that matches your description, pixel by pixel.

It's a lot like a sculptor staring at a rough block of marble. The statue is already "in there," and their job is to methodically remove all the stone that isn't part of the final form. The diffusion model does the exact same thing with digital noise, using your words as its chisel.

The Role of Text Prompts

Your prompt is everything. It's not just a friendly suggestion; it's the map the AI uses to navigate the infinite visual possibilities hiding in that initial field of static. Through its training, the model has built deep connections between words ("robot," "spaghetti," "eating") and the visual patterns that represent them.

The whole creative process unfolds in a few key stages:

- Initial Noise Generation: The AI kicks things off by generating a field of random pixels. This is the raw material, the "marble block."

- Text Encoding: Your prompt is converted into a numerical format—a set of coordinates on the map—that the AI can actually use.

- Iterative Denoising: Guided by your prompt, the model predicts and removes a tiny amount of noise in each step, steering the emerging image closer and closer to your description.

- Final Image Output: After many refinement cycles, the noise is gone, and what's left is a clear image that should match what you asked for.

This step-by-step method is why these models can produce such detailed and creative images. Each tiny adjustment builds on the last, allowing incredible complexity to emerge from what started as total randomness.
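The four stages above can be sketched as a toy Python loop. This is a deliberately simplified illustration, and it cheats in one important way: a real model has no finished picture to aim at and relies on a trained neural network to guess the noise, whereas here `encode_prompt` and `predict_noise` are hypothetical stand-ins that simply derive a fixed target from the prompt text. All names and default values are assumptions for this example.

```python
import random

def encode_prompt(prompt, size):
    """Toy 'text encoder': map the prompt to a repeatable list of numbers.

    A real system uses a trained network; this stand-in only guarantees
    that the same prompt always produces the same vector.
    """
    rng = random.Random(prompt)
    return [rng.uniform(-1.0, 1.0) for _ in range(size)]

def predict_noise(pixels, target):
    """Stand-in for the trained denoiser: its guess at what is still noise."""
    return [p - t for p, t in zip(pixels, target)]

def generate(prompt, size=16, steps=50, step_size=0.1, seed=0):
    rng = random.Random(seed)
    pixels = [rng.gauss(0.0, 1.0) for _ in range(size)]   # 1. initial noise
    target = encode_prompt(prompt, size)                  # 2. text encoding
    for _ in range(steps):                                # 3. iterative denoising
        noise = predict_noise(pixels, target)
        pixels = [p - step_size * n for p, n in zip(pixels, noise)]
    return pixels, target                                 # 4. final output

def distance(a, b):
    """Straight-line distance between two equally long lists of numbers."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

image, target = generate("a robot eating spaghetti")
print(distance(image, target))   # small: the static has been refined away
```

Notice that each pass removes only 10% of the remaining noise. No single step does much, but fifty small corrections add up to a clean result.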

Understanding GANs: The Forger and The Detective

https://www.youtube.com/embed/8L11aMN5KY8

Before diffusion models stole the spotlight, a different kind of AI rivalry was busy creating some of the most realistic images we’d ever seen. The technique is called a Generative Adversarial Network, or GAN, and it’s best explained as a high-stakes game between an art forger and an art detective.

Inside a GAN, you have two dueling neural networks:

- The Generator (The Forger): Its entire purpose is to create convincing fake images out of thin air. It starts by generating total nonsense—basically, digital noise—and tries to shape it into something that looks real.

- The Discriminator (The Detective): This is the expert. It has been shown thousands of real images and has learned to be an incredibly tough critic. Its job is simple: look at any image it's given and call it "real" or "fake."

This setup kicks off a fascinating cat-and-mouse game. At the beginning, the Generator is clumsy, and its creations are laughably bad. The Discriminator spots them as fakes instantly.

But here’s the clever part. Every time the Discriminator says "fake," the Generator gets a hint about why it failed. It takes that feedback, learns from its mistake, and tries again, making a slightly better forgery the next time around.

The Cycle of Improvement

This constant back-and-forth is what makes GANs so powerful. As the Generator (the forger) gets better at making fakes, the Discriminator (the detective) has to get smarter to keep up. It starts noticing smaller and smaller imperfections—subtle issues with shadows, textures, or shapes that it would have missed before.

This puts immense pressure on the Generator to improve its craft, because every flaw the Discriminator learns to spot is one the Generator has to learn to avoid.

The genius of the GAN model is that this adversarial process automatically pushes the system toward creating hyper-realistic results. The Generator isn't just trying to match a static goal; it's trying to fool a constantly evolving opponent.

Eventually, the Generator becomes so good that it can trick the Discriminator about 50% of the time. At this point, the detective is essentially guessing, unable to reliably tell the difference between a real photo and an AI-generated one.
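Here is one way to put numbers on that "guessing" state. The sketch below scores both players with binary cross-entropy, a common choice for GANs, though the article does not name a specific loss, so treat the formulas and the example scores as assumptions. A score is the Discriminator's confidence, between 0 and 1, that an image is real.

```python
import math

def discriminator_loss(scores_on_real, scores_on_fake):
    """Low when the detective scores real images near 1 and fakes near 0."""
    real_term = -sum(math.log(s) for s in scores_on_real) / len(scores_on_real)
    fake_term = -sum(math.log(1.0 - s) for s in scores_on_fake) / len(scores_on_fake)
    return real_term + fake_term

def generator_loss(scores_on_fake):
    """Low when the detective scores the forgeries near 1 ("real")."""
    return -sum(math.log(s) for s in scores_on_fake) / len(scores_on_fake)

# Early training: the detective is confident and correct (made-up scores).
early = discriminator_loss([0.95, 0.90], [0.05, 0.10])

# Late training: the detective answers 0.5 for everything, i.e. it is guessing.
late = discriminator_loss([0.5, 0.5], [0.5, 0.5])

print(early, late)
print(generator_loss([0.05, 0.10]), generator_loss([0.5, 0.5]))
```

With these formulas, a Discriminator that answers 0.5 for every image ends up with a loss of 2 × ln 2 (about 1.39). At the same time, the Generator's loss drops as its fakes stop being caught.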

The images produced at this stage are often incredibly lifelike, all thanks to thousands of rounds of this competitive game. This groundbreaking approach was a major leap forward in AI's creative abilities.

Turning Your Words Into Stunning Visuals

Knowing how the technology works is one thing. Actually controlling it is a whole different ball game. The real secret to getting the perfect AI-generated image isn't in the model itself—it’s in your text prompt.

Think of your prompt as the director's script for a movie or the artist's initial sketch. It’s the instruction manual that tells the AI exactly what to create. The skill of writing these instructions is called prompt engineering.

This is where you learn to guide the AI with real precision. It’s the difference between asking for "a dog" and getting a generic, stock-like photo, versus asking for "a golden retriever puppy playing in a field of daisies, soft morning light, hyperrealistic." The first is a vague suggestion; the second is a detailed creative brief.

Mastering this communication is how you unlock the AI’s true potential and turn a fuzzy idea in your head into a stunning, specific visual.

The Anatomy Of A Great Prompt

So, how do you build a prompt that gets the AI to see what you see? It starts by breaking your idea down into key pieces. A well-crafted prompt usually includes a few core elements that give the model a clear roadmap.

To get the best results, try to include these four pillars in your request:

- Subject: What’s the main focus? Be specific. Instead of "a car," try "a vintage red convertible."

- Style: What artistic feel are you going for? This could be anything from "photorealistic" or "cinematic" to "impressionist painting" or "anime style."

- Composition & Lighting: How should the scene be framed and lit? Use terms like "wide-angle shot," "close-up," "dramatic lighting," or "golden hour."

- Details & Modifiers: Add extra keywords to polish the image. Words like "highly detailed," "4K," "vibrant colors," or "ethereal glow" can make a huge impact.

When you weave these elements together, you build a comprehensive request that leaves very little to chance. You can see this in action with fun tools like AI baby generators, which rely on specific user inputs to create unique images.

Your prompt is a conversation with the AI. The more detail and clarity you provide, the better the AI can understand and execute your creative vision. A simple prompt gets a simple image; a rich prompt gets a rich image.

Improving Your Prompts From Simple To Specific

Let's see just how much of a difference a few extra details can make. The quality of your image improves dramatically as you add more layers to your prompt. Watch how a basic idea transforms with more information.

| Element | Simple Prompt | Detailed Prompt Example |
|---|---|---|
| Subject | "a car" | "a vintage red convertible" |
| Action | | "driving on a coastal road" |
| Setting | | "at sunset" |
| Style | | "cinematic photo, highly detailed" |
| Lighting | | "warm golden hour lighting" |
| Final Prompt | "a car" | "A vintage red convertible driving on a coastal road at sunset, cinematic photo, highly detailed, warm golden hour lighting" |

See the difference? Combining these elements helps the model sift through billions of possibilities to find the one that perfectly fits your request. This is the core of how AI can generate such specific and compelling images.
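If you build prompts often, it can help to assemble them from named parts. The small Python helper below is just one possible sketch: `build_prompt` and its parameter names are hypothetical, chosen to mirror the rows of the table above, and no image tool requires this structure.

```python
def build_prompt(subject, action="", setting="", style="", lighting=""):
    """Join the prompt elements into one comma-separated request."""
    # Subject, action, and setting read naturally as a single phrase.
    scene = " ".join(part for part in (subject, action, setting) if part)
    # Style and lighting are appended as comma-separated modifiers.
    pieces = [piece for piece in (scene, style, lighting) if piece]
    prompt = ", ".join(pieces)
    return prompt[:1].upper() + prompt[1:]

simple = build_prompt("a car")
detailed = build_prompt(
    subject="a vintage red convertible",
    action="driving on a coastal road",
    setting="at sunset",
    style="cinematic photo, highly detailed",
    lighting="warm golden hour lighting",
)
print(simple)
print(detailed)
```

The second call reproduces the Final Prompt row of the table. Keeping the elements separate makes it easy to swap just the lighting or the style while leaving the rest untouched.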

This skill is becoming essential across many modern AI content creation tools, which now rely on prompt engineering to produce everything from images to ad copy. It’s a fundamental part of creating in the digital world today.

As incredible as the technology behind AI image generation is, it's definitely not flawless. If you jump in without knowing its limits, you're bound to get frustrated.

Knowing the common hurdles ahead of time helps you set realistic expectations and, more importantly, learn how to work around the AI's weak spots to get much better results.

One of the most famous (and sometimes comical) issues is the AI's struggle with rendering realistic hands. Models are notorious for spitting out images with extra fingers, bizarre proportions, or hands bent in impossible ways. This happens because hands are just so complex, and their vast range of positions isn't as well-represented in training data as something like a face. The AI just hasn't seen enough good examples to really nail the anatomy.

Along the same lines, getting an AI to create readable text inside an image is a huge challenge. While the models can produce shapes that look like letters, the result is often just garbled nonsense. The AI sees text as a visual pattern, not a language system, which is why you get jumbles that look like a made-up alphabet.

Technical and Artistic Flaws

Beyond just hands and text, you'll run into other artistic and technical glitches that can instantly ruin an otherwise great image. These are the kinds of flaws that can make a picture unusable for any professional work, and even the best models out there still trip over them.

Keep an eye out for these common issues:

- Inconsistent Shadows and Lighting: You'll see things like an object casting a shadow in the completely wrong direction or the lighting on a person not matching the lighting of the background.

- Incorrect Scale and Proportions: Ever see an image of a person holding a coffee mug the size of their head? That's a classic sign the AI doesn't quite grasp how objects relate to each other in the real world.

- Low Output Resolution: Many models still have fairly low resolution limits. Output is frequently capped at 1024 x 1024 pixels, and even the more generous models often top out at 2048 x 2048 pixels. This can be a major roadblock if you need something for professional printing or a large digital display. For a deeper dive, you can explore more about the current state of AI image generation and its limitations on GetADigital.com.
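To see why those pixel limits matter for print, a quick calculation helps. The Python sketch below assumes 300 pixels per inch, a common guideline for high-quality printing that the article does not state, and the function name is hypothetical.

```python
def max_print_size_inches(width_px, height_px, dpi=300):
    """Largest print, in inches, that still packs `dpi` pixels into each inch."""
    return width_px / dpi, height_px / dpi

for side in (1024, 2048):
    width_in, height_in = max_print_size_inches(side, side)
    print(f"{side} x {side} px -> {width_in:.2f} x {height_in:.2f} in at 300 DPI")
```

Under that assumption, a 1024 x 1024 image prints cleanly at only about 3.4 inches per side, and a 2048 x 2048 image at about 6.8 inches. Anything larger needs upscaling or a lower print density.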

Knowing these limitations isn’t about being critical of the tech—it's about learning to use it smarter. For instance, if you know an AI is terrible with text, you can plan from the start to add it later yourself in a tool like Photoshop or Canva.

These hurdles are a great reminder that while AI is an amazing creative partner, it doesn't have the deep, nuanced understanding of a human artist. To get the most out of these tools, they still need a human touch, especially when you're trying to fit them into a professional process.

If you want to learn more about structuring your creative process with these tools in mind, check out our guide on building an effective content creation workflow. Recognizing these flaws is the first step to becoming a much more effective creator.

How AI Is Reshaping Creative Industries

AI image generation isn't just a cool new toy; it's fundamentally changing how creative professionals get their work done. This isn't some distant future—it’s happening right now in marketing, design, and entertainment, where AI is becoming a powerful creative partner.

For marketing teams, the biggest shift is speed. An idea for a new ad campaign that used to take weeks of back-and-forth with photographers and designers can now be visualized in minutes. This means you can rapidly test different concepts to see what resonates with your audience, leading to smarter, more effective campaigns.

This kind of efficiency is a game-changer for businesses of all sizes. Small shops and solo entrepreneurs can now produce professional-looking visuals without needing a huge budget or a dedicated design team. It really levels the playing field.

AI is making high-quality visual creation accessible to everyone. It's becoming a standard tool that frees up creatives to focus on strategy and the big picture, letting the machine handle the initial visual heavy lifting.

Practical Applications Across Industries

While the impact of AI image generators varies from one field to another, the core benefits—speed and accessibility—are universal. Creatives are finding clever ways to weave these tools into their daily workflows, taking the friction out of once-tedious tasks.

Here are a few real-world examples:

- Graphic Designers are using AI to instantly generate mood boards and initial concepts. This lets them explore dozens of creative directions in the time it used to take to flesh out just one.

- Social Media Managers can create a nearly endless supply of unique visuals for their posts, like the ones you see in eye-catching AI carousels, keeping their content feeds fresh and engaging.

- Game Developers can whip up placeholder assets and character concepts at incredible speeds, allowing them to prototype and test new ideas much faster than before.

The adoption numbers really tell the story. It's estimated that by 2025, a staggering 73% of marketing departments will be using generative AI for tasks like image creation. This trend is part of a market expected to blow past $66.62 billion by the end of 2025, proving just how deeply these tools are integrating into professional work. For more insights, check out the rise of generative AI at Mend.io.

Burning Questions About AI Images

As you start playing with AI image generators, a few big questions almost always pop up. It's totally normal. Let's dig into some of the most common practical and ethical issues you'll run into.

Can I Actually Use The Images I Make?

This is a big one, and honestly, it's complicated. For just messing around and personal projects, you're pretty much in the clear. But when money gets involved, things get murky.

The legal world is still catching up. In the U.S., for instance, the Copyright Office has stated that work made only by an AI can't be copyrighted because it lacks human authorship. This means the cool image you just generated might not have the same legal shield as a photo you took yourself.

Some platforms, like Midjourney, have terms that grant you commercial rights, but you absolutely have to read the fine print on whatever service you're using before you put an AI image on a product or in an ad.

Is It Wrong To Copy An Artist's Style With AI?

This is probably the single most heated debate in the AI art community. These models get smart by studying millions of images, including the entire life's work of countless artists. So when you type "in the style of Van Gogh," you're asking the AI to copy a very specific, human-developed signature.

Many artists are not happy about this, and it's easy to see why. Their work is being used to train a machine, often without their permission or getting paid for it.

Here’s how to think about it:

- Inspiration vs. Imitation: There's a difference. Artists have always learned from each other, but AI can produce uncanny copies at an incredible speed. It blurs the line between being inspired and just outright mimicking.

- An Artist's Livelihood: If anyone can generate a "new" piece in a famous artist's style for a few cents, what does that do to the value of the artist's actual work? It's a real concern.

- Choosing Ethical Tools: Some companies are trying to do it right. Adobe, for example, says its Firefly models are trained on licensed content, such as its own Adobe Stock library, along with public domain images, in order to sidestep this whole issue.

It really comes down to consent. Just because the technology can copy a style doesn't mean it's the right thing to do, especially for commercial projects. Think of it as a tool for new ideas, not a machine for cloning someone else's creativity.

What's The Real Difference Between Midjourney and DALL-E?

You'll hear these two names all the time, and while they both create images from text, they have very different personalities.

Think of Midjourney as the artist. It's famous for creating stunning, highly stylized, and often beautiful, painterly images. If you're looking for something with a rich, atmospheric vibe, Midjourney is usually the go-to.

DALL-E is more like the literalist engineer. It excels at photorealism and is ridiculously good at understanding weird, complex prompts with pinpoint accuracy. If you need a realistic photo of something that doesn't exist, like "a raccoon astronaut riding a dolphin," DALL-E is often your best bet.
